It is always interesting to zoom in on the history of a single AWS service or feature and watch how it has evolved over time in response to customer feedback. For example, Amazon Elastic Block Store (EBS) launched a decade ago and has been gaining features and functionality ever since. Here are a few of the most significant announcements:

- August 2008 – We launched EBS in production form, with support for volumes of up to 1 TB and snapshots to S3.

- September 2010 – We gave you the ability to tag EBS volumes.

- August 2012 – We launched Provisioned IOPS for EBS volumes, allowing you to dial in the level of performance that you need.

- June 2014 – We gave you the ability to create SSD-backed EBS volumes.

- March 2015 – We gave you the ability to create EBS volumes of up to 16 TB and 20,000 IOPS.

- April 2016 – We gave you new cold storage and throughput options.

- June 2016 – We gave you the power to create cross-account copies of encrypted EBS snapshots.

- February 2017 – We launched Elastic Volumes, allowing you to adjust the size, performance, and volume type of an active, mounted EBS volume.

- December 2017 – We gave you the ability to create SSD-backed volumes that deliver up to 32,000 IOPS.

- May 2017 – We launched cost allocation for EBS snapshots so that you can assign costs to projects, departments, or other entities.

- April 2018 – We gave you the ability to tag EBS snapshots on creation and to use resource-level permissions to implement stronger security policies.

- May 2018 – We announced that encrypted EBS snapshots are now stored incrementally, resulting in a performance improvement and cost savings.

Several of the items that I chose to highlight above make EBS snapshots more useful and more flexible. As you may already know, it is easy to create snapshots. Each snapshot is a point-in-time copy of the blocks that have changed since the previous snapshot, with automatic management to ensure that only the data unique to a snapshot is removed when it is deleted. This incremental model reduces your costs and minimizes the time needed to create a snapshot.
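To make the incremental model concrete, here is a toy sketch in Python. It is not how EBS is implemented; the `SnapshotStore` class, its reference-counting scheme, and the block contents are all assumptions made for illustration. Each snapshot stores only the blocks that changed since the previous one, and deleting a snapshot frees only the blocks that no other snapshot still references.

```python
from collections import Counter


class SnapshotStore:
    """Toy model (hypothetical): snapshots share unchanged blocks via ref counts."""

    def __init__(self):
        self.blocks = {}        # block id -> stored data
        self.refs = Counter()   # block id -> number of snapshots referencing it
        self.snapshots = {}     # snapshot name -> {volume offset: block id}
        self._next_id = 0

    def create(self, name, volume):
        """Snapshot `volume` (offset -> data); return how many blocks were stored."""
        if name in self.snapshots:
            raise ValueError(f"snapshot {name!r} already exists")
        # Compare against the most recent snapshot, if there is one.
        previous = list(self.snapshots.values())[-1] if self.snapshots else {}
        layout, stored = {}, 0
        for offset, data in volume.items():
            block_id = previous.get(offset)
            if block_id is None or self.blocks[block_id] != data:
                block_id = self._next_id   # new or changed block: store a copy
                self._next_id += 1
                self.blocks[block_id] = data
                stored += 1
            layout[offset] = block_id
            self.refs[block_id] += 1
        self.snapshots[name] = layout
        return stored

    def delete(self, name):
        """Delete a snapshot; return how many blocks were actually freed."""
        freed = 0
        for block_id in self.snapshots.pop(name).values():
            self.refs[block_id] -= 1
            if self.refs[block_id] == 0:   # unique to the deleted snapshot
                del self.refs[block_id]
                del self.blocks[block_id]
                freed += 1
        return freed

    def restore(self, name):
        """Rebuild the full volume contents recorded by a snapshot."""
        return {off: self.blocks[b] for off, b in self.snapshots[name].items()}


store = SnapshotStore()
volume = {0: "boot", 1: "logs-v1", 2: "data"}
stored_mon = store.create("mon", volume)   # first snapshot stores every block
volume[1] = "logs-v2"
stored_tue = store.create("tue", volume)   # only the changed block is stored
freed = store.delete("mon")                # frees only the old "logs-v1" block
```

After `mon` is deleted, `tue` can still be restored in full, because the two unchanged blocks it shares with `mon` were never freed.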

Because snapshots are so easy to create and use, our customers create a lot of them, and make great use of tags to categorize, organize, and manage them. Going back to my list, you can see that we have added multiple tagging features over the years.

Lifecycle Management – The Amazon Data Lifecycle Manager

We want to make it even easier for you to create, use, and benefit from EBS snapshots! Today we are launching Amazon Data Lifecycle Manager to automate the creation, retention, and deletion of Amazon EBS volume snapshots. Instead of creating snapshots manually and deleting them in the same way (or building a tool to do it for you), you simply create a policy, indicating (via tags) which volumes are to be snapshotted, set a retention model, fill in a few other details, and let Data Lifecycle Manager do the rest. Data Lifecycle Manager is powered by tags, so you should start by setting up a clear and comprehensive tagging model for your organization (refer to the links above to learn more).

It turns out that many of our customers have invested in tools to automate the creation of snapshots, but have skimped on the retention and deletion. Sooner or later they receive a surprisingly large AWS bill and find that their scripts are not working as expected. Data Lifecycle Manager should help them save money and rest assured that their snapshots are being managed as expected.
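The retention step that homegrown scripts tend to skip can be sketched in a few lines. This is a minimal illustration that assumes a count-based retention model (keep the newest N snapshots of a volume); the function name, the dictionary shape, and the snapshot IDs are hypothetical, not part of the service.

```python
from datetime import datetime


def snapshots_to_delete(snapshots, retain_count):
    """Return ids of snapshots beyond the newest `retain_count`, oldest first."""
    if retain_count < 1:
        raise ValueError("retain_count must be at least 1")
    newest_first = sorted(snapshots, key=lambda s: s["start_time"], reverse=True)
    expired = newest_first[retain_count:]
    return [s["id"] for s in reversed(expired)]


# Hypothetical snapshots of a single volume, one per day.
history = [
    {"id": "snap-a", "start_time": datetime(2018, 7, 9, 9, 0)},
    {"id": "snap-b", "start_time": datetime(2018, 7, 10, 9, 0)},
    {"id": "snap-c", "start_time": datetime(2018, 7, 11, 9, 0)},
    {"id": "snap-d", "start_time": datetime(2018, 7, 12, 9, 0)},
]
expired_ids = snapshots_to_delete(history, retain_count=2)
```

With a retention count of 2, the two oldest snapshots are selected for deletion and the two newest are kept.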

Creating and Using a Lifecycle Policy

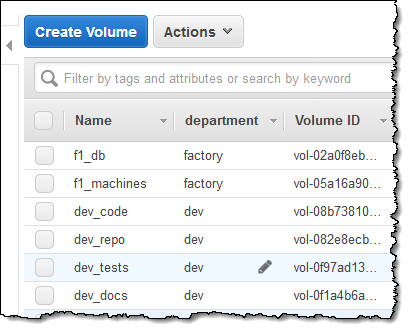

Data Lifecycle Manager uses lifecycle policies to figure out when to run, which volumes to snapshot, and how long to keep the snapshots around. You can create the policies in the AWS Management Console, from the AWS Command Line Interface (CLI) or via the Data Lifecycle Manager APIs; I’ll use the Console today. Here are my EBS volumes, all suitably tagged with a department:

I access the Lifecycle Manager from the Elastic Block Store section of the menu:

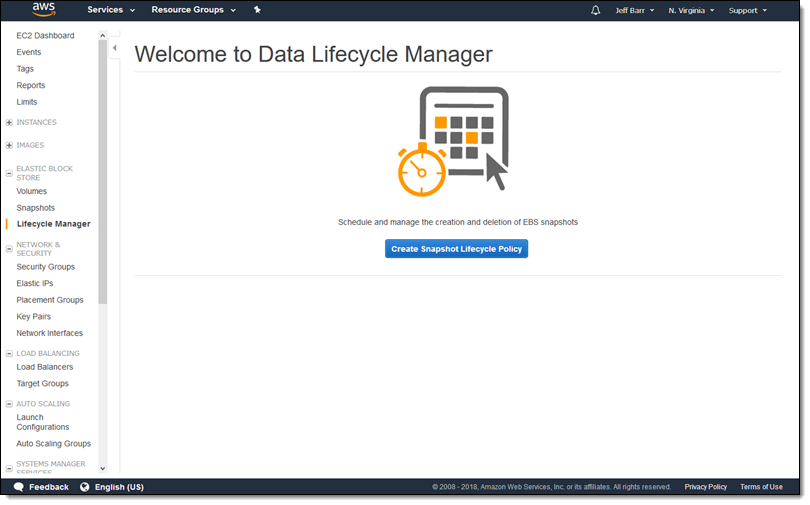

Then I click Create Snapshot Lifecycle Policy to proceed:

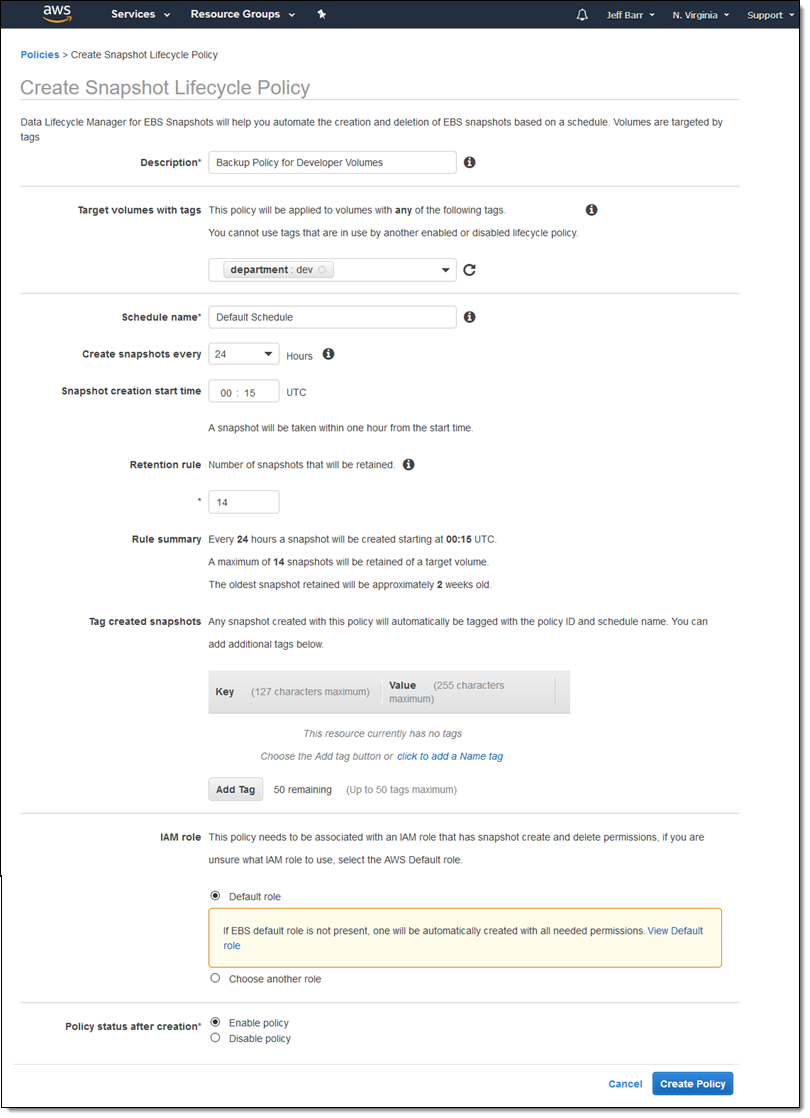

Then I create my first policy:

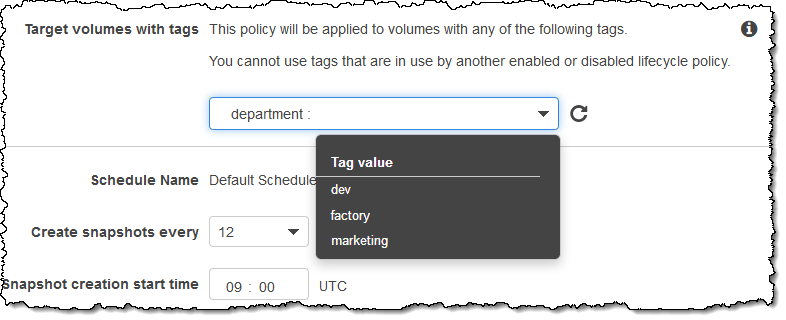

I use tags to specify the volumes that the policy applies to. If I specify multiple tags, then the policy applies to volumes that have any of the tags:
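The "any of the tags" rule can be sketched as a small predicate. This is only an illustration of the matching semantics described above; the function name, volume IDs, and tag values are hypothetical.

```python
def policy_applies(volume_tags, target_tags):
    """True if the volume carries at least one of the policy's target tags."""
    return any(volume_tags.get(key) == value for key, value in target_tags)


# Hypothetical volumes and their tags.
volumes = {
    "vol-1": {"Department": "Engineering"},
    "vol-2": {"Department": "Sales", "Env": "prod"},
    "vol-3": {"Env": "dev"},
}
# A policy with two target tags matches a volume that has either one.
target_tags = [("Department", "Engineering"), ("Env", "prod")]
matched = [vol_id for vol_id, tags in volumes.items()
           if policy_applies(tags, target_tags)]
```

Here `vol-1` matches on its department, `vol-2` matches on its environment, and `vol-3` matches neither tag.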

I can create snapshots at 12- or 24-hour intervals, and I can specify the desired snapshot time. Snapshot creation will start no more than an hour after this time, with completion based on the size of the volume and the degree of change since the last snapshot.
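The schedule settings can be sketched as follows. The dictionary keys mirror the `CreateRule` structure of the Data Lifecycle Manager API as I understand it (`Interval`, `IntervalUnit`, `Times`, with times given in UTC); the helper names and the one-hour window calculation are my own illustration of the timing rule described above.

```python
from datetime import date, datetime, timedelta

ALLOWED_INTERVALS = (12, 24)  # the intervals described in this post


def create_rule(interval_hours, start_time):
    """Build a CreateRule-style dict after validating its inputs."""
    if interval_hours not in ALLOWED_INTERVALS:
        raise ValueError("interval must be 12 or 24 hours")
    datetime.strptime(start_time, "%H:%M")  # raises ValueError if malformed
    return {"Interval": interval_hours, "IntervalUnit": "HOURS",
            "Times": [start_time]}


def start_windows(rule, day):
    """List (earliest, latest) start times for the 24 hours beginning on `day`."""
    first = datetime.combine(
        day, datetime.strptime(rule["Times"][0], "%H:%M").time())
    windows = []
    for n in range(24 // rule["Interval"]):
        earliest = first + timedelta(hours=n * rule["Interval"])
        # Creation starts no more than an hour after the scheduled time.
        windows.append((earliest, earliest + timedelta(hours=1)))
    return windows


rule = create_rule(12, "09:00")
windows = start_windows(rule, date(2018, 7, 12))
```

A 12-hour rule anchored at 09:00 yields two windows per day, 09:00 to 10:00 and 21:00 to 22:00.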

I can use the built-in default IAM role or I can create one of my own. If I use my own role, I need to enable the EC2 snapshot operations and all of the DLM (Data Lifecycle Manager) operations; read the docs to learn more.
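For a custom role, the two documents involved might look roughly like the sketch below. Treat the exact action list as an assumption on my part and check it against the docs: it is my reading of "the EC2 snapshot operations and all of the DLM operations", plus a trust policy that lets the `dlm.amazonaws.com` service assume the role.

```python
import json

# Trust policy: allows Data Lifecycle Manager to assume the role.
TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Principal": {"Service": "dlm.amazonaws.com"},
        "Action": "sts:AssumeRole",
    }],
}

# Permissions policy: the action list is an assumption; verify it in the docs.
PERMISSIONS_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {   # EC2 snapshot operations
            "Effect": "Allow",
            "Action": ["ec2:CreateSnapshot", "ec2:DeleteSnapshot",
                       "ec2:DescribeVolumes", "ec2:DescribeSnapshots"],
            "Resource": "*",
        },
        {   # tagging of the snapshots that the policy creates
            "Effect": "Allow",
            "Action": ["ec2:CreateTags"],
            "Resource": "arn:aws:ec2:*::snapshot/*",
        },
        {   # all Data Lifecycle Manager operations
            "Effect": "Allow",
            "Action": "dlm:*",
            "Resource": "*",
        },
    ],
}

trust_json = json.dumps(TRUST_POLICY, indent=2)
permissions_json = json.dumps(PERMISSIONS_POLICY, indent=2)
```

The two JSON strings are in the form that IAM expects when a role is created and a policy is attached to it.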

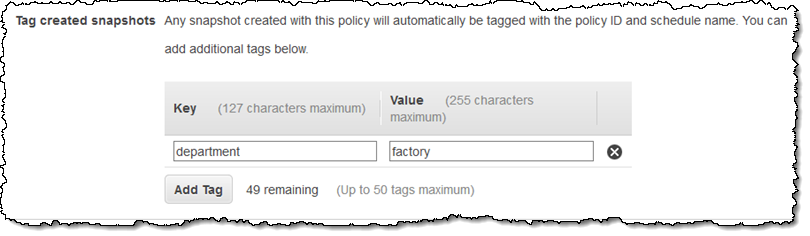

Newly created snapshots will be tagged with the aws:dlm:lifecycle-policy-id and aws:dlm:lifecycle-schedule-name tags automatically; I can also specify up to 50 additional key/value pairs for each policy:
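The same policy can be expressed in code. The sketch below builds the request that, to my understanding, the `CreateLifecyclePolicy` API (boto3's `create_lifecycle_policy`) accepts, and takes the client as a parameter so that nothing here talks to AWS on its own. The helper names, the account ID in the role ARN, the schedule name, and the tag values are placeholders of mine.

```python
MAX_EXTRA_TAGS = 50  # limit on additional key/value pairs described above


def build_policy_request(role_arn, description, target_tags, interval_hours,
                         start_time, retain_count, extra_tags=None):
    """Assemble keyword arguments for a create_lifecycle_policy call."""
    extra_tags = extra_tags or {}
    if len(extra_tags) > MAX_EXTRA_TAGS:
        raise ValueError("at most 50 additional tags are allowed")
    return {
        "ExecutionRoleArn": role_arn,
        "Description": description,
        "State": "ENABLED",
        "PolicyDetails": {
            "ResourceTypes": ["VOLUME"],
            "TargetTags": [{"Key": k, "Value": v}
                           for k, v in target_tags.items()],
            "Schedules": [{
                "Name": f"Every {interval_hours} hours",
                "TagsToAdd": [{"Key": k, "Value": v}
                              for k, v in extra_tags.items()],
                "CreateRule": {"Interval": interval_hours,
                               "IntervalUnit": "HOURS",
                               "Times": [start_time]},
                "RetainRule": {"Count": retain_count},
            }],
        },
    }


def create_policy(dlm_client, **settings):
    """Create the policy with the given client and return the new policy id."""
    response = dlm_client.create_lifecycle_policy(
        **build_policy_request(**settings))
    return response["PolicyId"]


request = build_policy_request(
    role_arn="arn:aws:iam::123456789012:role/AWSDataLifecycleManagerDefaultRole",
    description="Engineering volumes, twice daily",
    target_tags={"Department": "Engineering"},
    interval_hours=12,
    start_time="09:00",
    retain_count=5,
    extra_tags={"CreatedBy": "dlm-demo"},
)
```

In real use, `dlm_client` would be `boto3.client("dlm")`, and `create_policy(dlm_client, ...)` would take the same keyword arguments as `build_policy_request`.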

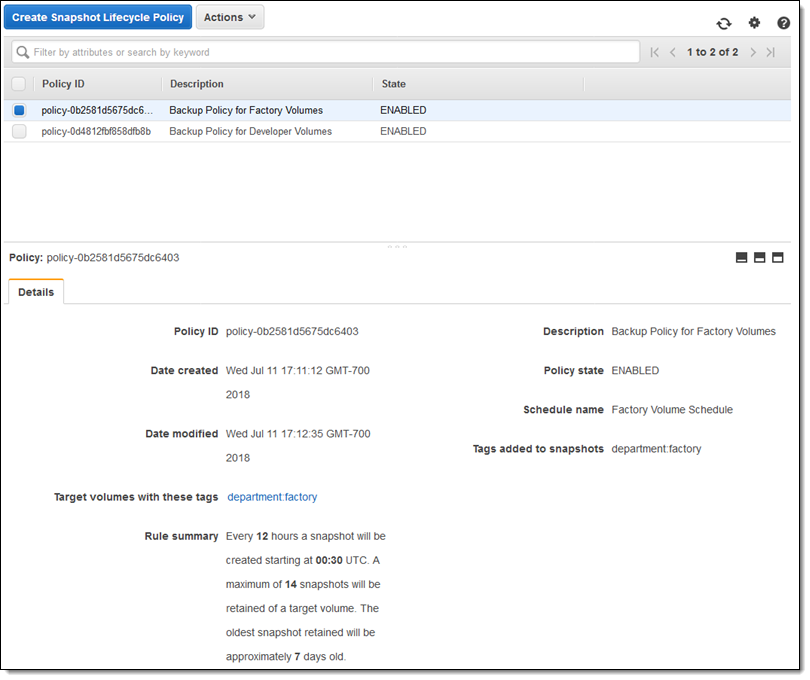

I can see all of my policies at a glance:

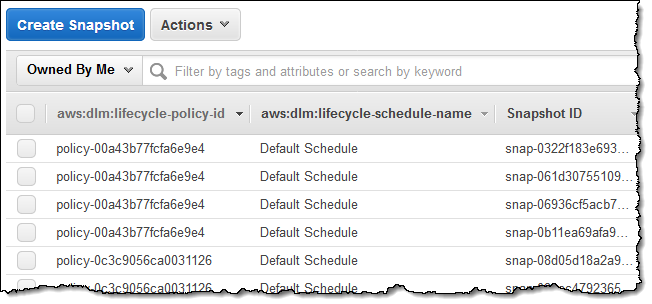

I took a short break and came back to find that the first set of snapshots had been created, as expected (I configured the console to show the two tags created on the snapshots):

Things to Know

Here are a couple of things to keep in mind when you start to use Data Lifecycle Manager to automate your snapshot management:

Data Consistency – Snapshots will contain the data from all completed I/O operations, a property known as crash consistency.

Pricing – You can create and use Data Lifecycle Manager policies at no charge; you pay the usual storage charges for the EBS snapshots that it creates.

Availability – Data Lifecycle Manager is available in the US East (N. Virginia), US West (Oregon), and Europe (Ireland) Regions.

Tags and Policies – If a volume has more than one tag and the tags match multiple policies, each policy will create a separate snapshot and both policies will govern the retention. No two policies can specify the same key/value pair for a tag.

Programmatic Access – You can create and manage policies programmatically! Take a look at the CreateLifecyclePolicy, GetLifecyclePolicies, and UpdateLifecyclePolicy functions to get started. You can also write an AWS Lambda function that runs in response to the createSnapshot event.
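As a rough sketch of policy management in code, the function below lists the enabled policies and disables those whose description starts with a given prefix. It assumes the boto3 method names `get_lifecycle_policies` and `update_lifecycle_policy` and the response shape that I understand them to have; the function name and the prefix convention are hypothetical. The client is passed in, so in real use it would be `boto3.client("dlm")`.

```python
def disable_policies(dlm_client, description_prefix):
    """Disable enabled policies whose description starts with the prefix."""
    disabled = []
    response = dlm_client.get_lifecycle_policies(State="ENABLED")
    for summary in response["Policies"]:
        if summary.get("Description", "").startswith(description_prefix):
            dlm_client.update_lifecycle_policy(
                PolicyId=summary["PolicyId"], State="DISABLED")
            disabled.append(summary["PolicyId"])
    return disabled
```

Calling `disable_policies(boto3.client("dlm"), "Engineering")` would pause every enabled policy whose description begins with "Engineering" and return the affected policy IDs.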

Error Handling – Data Lifecycle Manager generates a “DLM Policy State Change” event if a policy enters the error state.
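A Lambda function can react to both kinds of events mentioned above. The handler below is a minimal sketch: the "DLM Policy State Change" name comes from this post, while the "EBS Snapshot Notification" detail type and the field names inside `detail` (`state`, `cause`, `policy_id`, `event`, `result`, `snapshot_id`) are assumptions based on my reading of the event documentation and should be verified before use. The returned dictionaries are purely illustrative.

```python
def handler(event, context=None):
    """Route EBS snapshot and DLM policy events to a simple action record."""
    detail_type = event.get("detail-type")
    detail = event.get("detail", {})

    # A policy entered the error state: surface it for alerting.
    if detail_type == "DLM Policy State Change" and detail.get("state") == "ERROR":
        return {"action": "alert",
                "policy": detail.get("policy_id"),
                "cause": detail.get("cause")}

    # A snapshot finished (or failed): record the outcome.
    if (detail_type == "EBS Snapshot Notification"
            and detail.get("event") == "createSnapshot"):
        return {"action": "record",
                "snapshot": detail.get("snapshot_id"),
                "result": detail.get("result")}

    return {"action": "ignore"}
```

In a real deployment the returned values would be replaced by something useful, such as publishing a notification for errors or writing snapshot results to a log.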

In the Works – As you might have guessed from the name, we plan to add support for additional AWS data sources over time. We also plan to support policies that will let you do weekly and monthly snapshots, and also expect to give you additional scheduling flexibility.